How to deploy OPNFV Colorado 3.0 with VOSYSwitch

OPNFV requirements, integration of a high-performance virtual switch

The Open Platform for NFV (OPNFV) project aims to be a carrier-grade, integrated platform that simplifies the introduction of products and services into the networking industry. It builds on the requirements and consensus of industry leaders to refine NFV capabilities and to provide a more standard and interoperable solution. The platform establishes an ecosystem for Network Functions Virtualization (NFV) solutions based on open standards and software, to better meet the needs of end users and of service provisioning.

In this context, the choice of virtual switch is a key factor in keeping the deployment easy and the performance high. For this reason, Virtual Open Systems guides the reader through the integration into OPNFV of VOSYSwitch, a high-performance, inter-architecture (x86 and ARMv8) user space virtual switch.

1. Integration of VOSYSwitch in OPNFV as an L2 forwarding dataplane

This guide shows how to install the user space virtual switch VOSYSwitch within OPNFV. VOSYSwitch is used as a layer 2 forwarding dataplane for OpenStack Neutron networking.

The online guides available for installing OPNFV describe procedures for either a set of VMs or a baremetal environment. This guide uses a mixed environment that includes both virtualized and baremetal hosts. In the proposed scenario, the management roles run as virtual machines, whereas the nodes with compute roles, which host the instances, are deployed on baremetal machines. This is a more flexible way to deploy OPNFV, as the user can dedicate physical resources to specific tasks while running the less resource-demanding roles as virtualized instances.

A performance comparison between an OPNFV setup running VOSYSwitch and one using OVS-DPDK, under the same test environment, is presented at the end of the guide. An excerpt of the full performance table is given below; the full table is shown in the relevant section at the end of this guide.

The results show that VOSYSwitch outperforms OVS-DPDK, providing superior wire-speed connectivity for the VMs. Its simple integration within OPNFV gives operators, service providers and integrators a solution for carrier-grade production deployments. In addition, VOSYSwitch is easy to deploy in heterogeneous cloud and telecommunication datacenters, supporting both x86 and ARMv8 server architectures, which addresses the interoperability limitations of other vSwitch solutions on the market. Moreover, VOSYSwitch sustains higher performance when using VLAN-isolated multi-tenant networking: it outperforms OVS-DPDK in both the inter-host and same-host scenarios, measured with iperf using a realistic TCP traffic pattern.

| Scenario | Parallel threads | MTU size | OVS-DPDK | VOSYSwitch |
|---|---|---|---|---|
| Same host | 1 | 1500 | 2.14 Gbps | 10.3 Gbps |
| Inter-host | 1 | 1500 | 4.88 Gbps | 9.20 Gbps |
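To put the excerpt in relative terms, the ratio between the two throughput columns can be computed directly. The following is only a small arithmetic sketch over the four figures quoted above; the helper name `speedup` is hypothetical and is not part of the guide's archive.

```shell
# Ratio of VOSYSwitch throughput to OVS-DPDK throughput (same unit for both).
# "speedup" is a hypothetical helper used only for this illustration.
speedup() {
    # $1 = VOSYSwitch throughput, $2 = OVS-DPDK throughput
    LC_ALL=C awk -v a="$1" -v b="$2" 'BEGIN { printf "%.2f\n", a / b }'
}

echo "Same host:  $(speedup 10.3 2.14)x"   # figures from the excerpt
echo "Inter-host: $(speedup 9.20 4.88)x"
```

With the values of the excerpt, this gives a ratio of about 4.8 for the same-host scenario and about 1.9 for the inter-host scenario.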

2. OPNFV infrastructure setup configuration

The infrastructure setup is based on the latest available OPNFV Colorado 3.0 distribution, and it follows the guidelines given in the OPNFV Pharos hardware specifications.

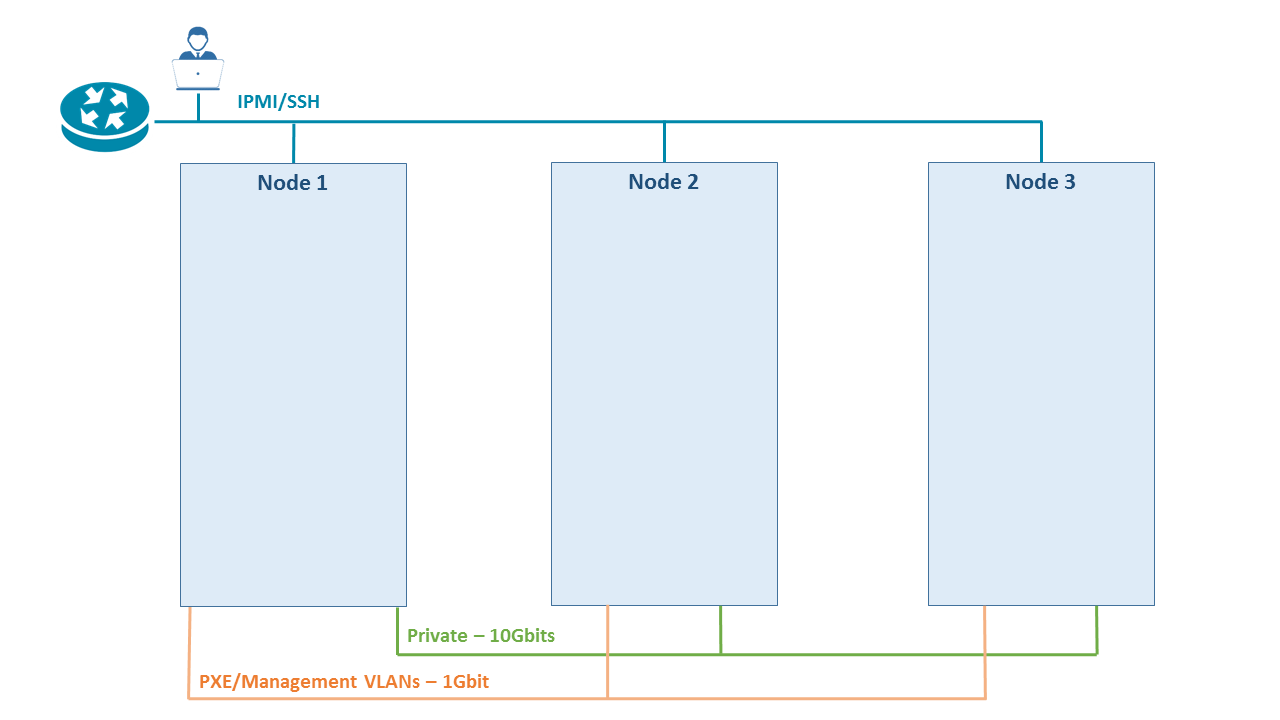

The physical layout of the setup is shown in Figure 1.

Figure 1 - Physical overview

A single baremetal machine (Node 1) running Ubuntu 16.04 serves as a host where the Fuel Master VM and the OpenStack Controller VM run. This server has the following specifications:

- Supermicro Super Server

- 8 x 8GB RAM Memory

- Intel(R) Xeon(R) CPU E5-2687W v3 @ 3.10GHz

- 2 x Intel Corporation I350 1Gb

- Intel Corporation X710 10GbE SFP+

- 1TB Storage

Two other baremetal servers (Node 2 and Node 3) are deployed as OpenStack Compute nodes. Each of them has the following specifications:

- Supermicro Super Server

- 8 x 8GB RAM Memory

- 2 x Intel(R) Xeon(R) CPU E5-2630 v3 @ 2.40GHz

- Lights-out management through an IPMI-capable interface on each of the nodes

- 2 x Intel Corporation I210 1Gb

- Intel Corporation X520 (82599ES based) 10GbE SFP+

- 1TB Storage

The network setup consists of the following physical networks:

- Lights-out management network using IPMI

- 1 Gbit network for PXE booting and OpenStack management

- 10 Gbit network for the tenants' private network

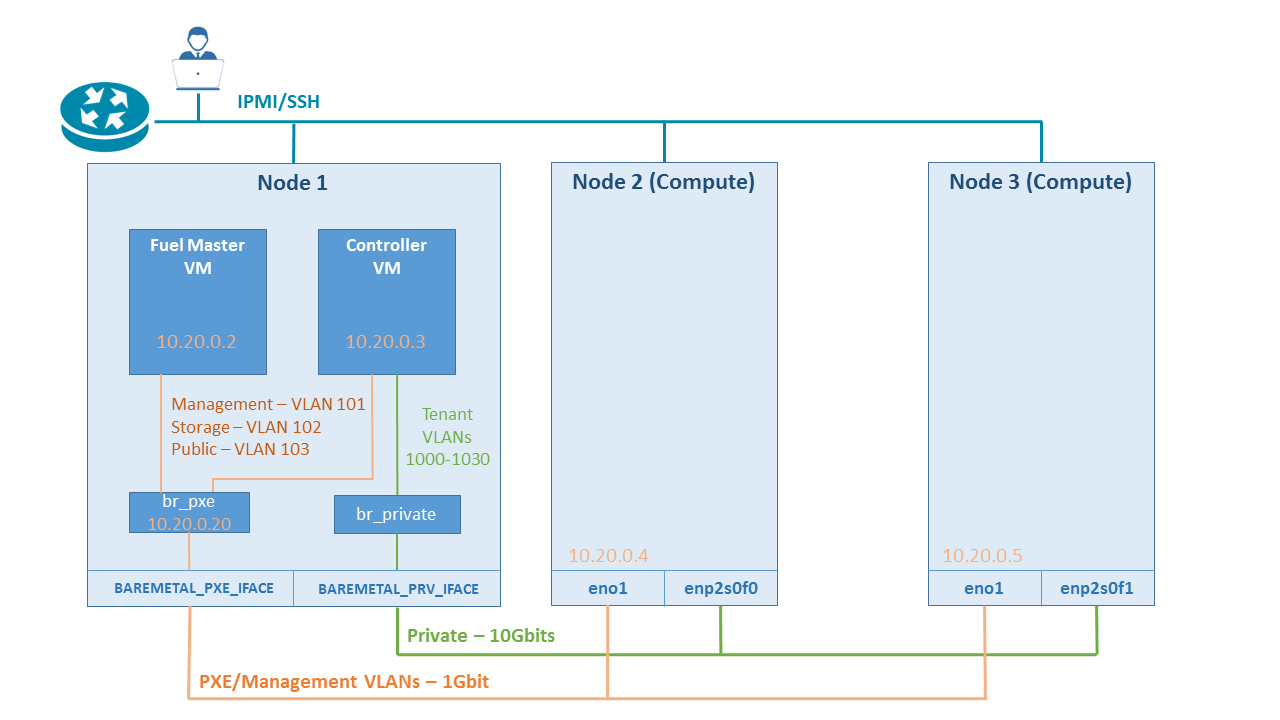

The functional network structure of the deployed OpenStack is shown in Figure 2.

Figure 2 - Logical overview

The software artifacts needed to follow this guide are provided in a compressed archive, which can be downloaded after registering on the Virtual Open Systems web site. Extract it on Node 1, which hosts the Fuel Master and the OpenStack Controller VMs (cf. Figure 2 above).

3. OPNFV environment initialization

Attention!

Virtual Open Systems shall not be responsible for any damage that might be caused to your platform while following the instructions below. While the content of this guide has been tested thoroughly, Virtual Open Systems cannot guarantee the absence of errors that may harm the target deployment platform.

The script network.sh is used to bring up the virtual network environment on Node 1. It is assumed that the physical network is wired as shown in Figure 1.

The following variables in network.sh should be edited to match the interfaces in the setup used. In this case:

BAREMETAL_PXE_IFACE=eno2

BAREMETAL_PRV_IFACE=ens4f0
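Before running the script, it can help to confirm that the interface names assigned to these variables actually exist on Node 1. The following is a minimal pre-flight sketch, assuming a Linux host that lists its interfaces under /sys/class/net; the helper names `iface_exists` and `check_ifaces` and the `SYSFS_NET` override are hypothetical and are not part of the provided archive.

```shell
# Hypothetical pre-flight check, not part of the provided archive.
# SYSFS_NET can be overridden for testing; by default it is the sysfs
# directory where Linux lists the network interfaces.
SYSFS_NET="${SYSFS_NET:-/sys/class/net}"

# Succeeds if an interface with the given name is known to the kernel.
iface_exists() {
    [ -e "$SYSFS_NET/$1" ]
}

# Reports every interface given as argument; fails if any is missing.
check_ifaces() {
    missing=0
    for iface in "$@"; do
        if iface_exists "$iface"; then
            echo "ok: $iface"
        else
            echo "missing: $iface" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Usage, with the values of this guide:
#   check_ifaces eno2 ens4f0 && ./network.sh
```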

Run the script:

# ./network.sh

The script always re-initializes the network setup, so if a reset of the environment is needed it can be invoked at any time to restore the configuration.

During the deployment, Fuel uses the public network to ping repositories in order to check Internet connectivity. To enable this, turn on IP forwarding and add an iptables rule that masquerades the outgoing traffic through an interface of the virtualization host (Node 1):

# echo 1 > /proc/sys/net/ipv4/ip_forward

# iptables -t nat -A POSTROUTING -s "172.16.16.1/24" -o "e+" -j MASQUERADE
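Because `iptables -A` appends a new rule on every invocation, repeating the command above (for example after a reset of the environment) leaves duplicate entries in the POSTROUTING chain. A possible way to avoid this, sketched here under the assumption that the installed iptables supports the `-C` (check) option, is to add the rule only when it is not already present. The function name `ensure_masquerade` is hypothetical.

```shell
# Sketch: add the masquerade rule only if an identical one does not exist yet.
# "ensure_masquerade" is a hypothetical helper, not part of the archive.
ensure_masquerade() {
    src="$1"   # source subnet, 172.16.16.1/24 in this guide
    out="$2"   # outgoing interface pattern, e+ in this guide
    if iptables -t nat -C POSTROUTING -s "$src" -o "$out" -j MASQUERADE 2>/dev/null; then
        echo "rule already present"
    else
        iptables -t nat -A POSTROUTING -s "$src" -o "$out" -j MASQUERADE
    fi
}

# Usage (as root), with the values of this guide:
#   ensure_masquerade "172.16.16.1/24" "e+"
```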

To allow guests that use QEMU's user networking to ping, the host must permit ICMP echo requests from unprivileged users. To do so, add the following line to the /etc/sysctl.conf file as root:

net.ipv4.ping_group_range = 0 2147483647

Then, apply it by running:

# sysctl -p
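If the setup is repeated, the same line may end up in /etc/sysctl.conf several times. The steps above can be sketched in an idempotent form as follows; the function name `ensure_sysctl_line` is hypothetical, and the path is passed as an argument so that the sketch can be tried on a scratch file first.

```shell
# Sketch: append a line to a sysctl configuration file only if the exact
# same line is not already there. "ensure_sysctl_line" is a hypothetical helper.
ensure_sysctl_line() {
    conf="$1"   # configuration file, e.g. /etc/sysctl.conf
    line="$2"   # exact line to add
    grep -qxF "$line" "$conf" 2>/dev/null || printf '%s\n' "$line" >> "$conf"
}

# Usage (as root), with the setting of this guide:
#   ensure_sysctl_line /etc/sysctl.conf "net.ipv4.ping_group_range = 0 2147483647"
#   sysctl -p
#   cat /proc/sys/net/ipv4/ping_group_range   # range currently in effect
```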

Once the network is configured, continue with initializing the virtual machines:

# ./init_vms.sh

The result should be a set of disk images for the Fuel Master VM and for the Controller VM. Note that the latter uses three hard disk images.

Download the Colorado 3.0 Fuel installer ISO from the official OPNFV artifacts into the same folder.
